by Kreisha Ballantyne

I have a friend, a pilot with much more experience than I, who once shared some valuable advice after sitting in the right-hand seat with me (a then low-hour pilot). ‘If your flight starts to sound like the first few lines of an ATSB report, it’s time to abandon the flight and examine your planning,’ he said.

It was one of those flights, and I’m glad it happened while I was with someone more experienced than myself. Upon departing an airshow, I had failed to make the correct taxi call. Backtracking the runway, I called the wrong runway direction and, upon take-off, the door popped open. I conducted a go-around and landed, deciding to make it a full stop. As I parked, I failed to apply the park brake adequately, only just preventing the aircraft from rolling into another.

Not one of these occurrences is a disaster on its own but added up—along with my own tendency to self-flagellate, which only serves to distract my focus from being present in the moment—they can amount to a serious accident.

My abandoned flight is a prime example of James Reason’s Swiss cheese model, in which human system defences are likened to a series of slices of randomly holed Swiss cheese arranged vertically and parallel to each other, with gaps between each slice. The holes represent weaknesses in individual parts of the system and vary in size and position in each slice. The system as a whole produces failures when holes in all the slices momentarily align, permitting ‘a trajectory of accident opportunity’, so that a hazard passes through holes in all of the defences, leading to an accident.

The Swiss cheese model is so often applied to aviation because it’s an industry in which systems are so vital that when they fail, they so often lead to disaster. The model springs from the understanding that there are at least four types of failure required to allow an accident to happen: failures due to organisational influences, ineffective supervision, pre-conditions and specific acts. In my case, several mistakes combined, adding up to the need for a return to the departure airfield and culminating in my failure to properly secure the aircraft. Fortunately, the outcome wasn’t any worse.

In addition to the Swiss cheese model is the University of Queensland’s Bowtie model, composed of a centre, left and right.

In the centre is the hazard, which is the activity being analysed (flying an aircraft in our case). Categorised under the hazard is the ‘top event’, which is the ‘knot’ in the centre of the bowtie. It is the thing you want to avoid, because it signifies the worst has happened: the hazard has asserted itself. A plausible, but highly undesirable top event would be ‘losing control in flight’.

On the left side of the bowtie are the threats. These are things that might contribute to the top event. In my example they could be ‘low-hour pilot’, ‘high traffic density at airshow’ and ‘pressure to get home’.

On the right side of the bowtie are the consequences. In my example these could have included ‘wrong taxi call causes taxiing incident’, ‘door popping open causes sudden altitude loss due to distraction’ and ‘parking brake misapplication causes damage to multiple aircraft’.

The bowtie method makes hazard analysis visible: it clearly displays the links between threats, preventative measures and consequences, as well as the measures that can mitigate the consequences of any incident. My incident lined up almost every hole in the Swiss cheese, and the resulting hazards are a perfect illustration of the bowtie model.
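For readers who like to see a structure written down, the bowtie described above can be sketched as a small data structure. This is only an illustrative sketch: the `Bowtie` class and its `pathways` method are hypothetical names invented here, not part of any official bowtie tool, and the entries are simply the examples from my airshow departure.

```python
from dataclasses import dataclass, field


@dataclass
class Bowtie:
    """Hypothetical, minimal representation of a bowtie diagram."""
    hazard: str                      # the activity being analysed
    top_event: str                   # the 'knot' in the centre
    threats: list[str] = field(default_factory=list)       # left side
    consequences: list[str] = field(default_factory=list)  # right side

    def pathways(self):
        """Every threat -> top event -> consequence path through the knot."""
        return [
            (threat, self.top_event, consequence)
            for threat in self.threats
            for consequence in self.consequences
        ]


# Entries taken from the airshow example in the text.
airshow_departure = Bowtie(
    hazard="flying an aircraft",
    top_event="losing control in flight",
    threats=[
        "low-hour pilot",
        "high traffic density at airshow",
        "pressure to get home",
    ],
    consequences=[
        "wrong taxi call causes taxiing incident",
        "door popping open causes sudden altitude loss due to distraction",
        "parking brake misapplication causes damage to multiple aircraft",
    ],
)

for threat, top_event, consequence in airshow_departure.pathways():
    print(f"{threat} -> {top_event} -> {consequence}")
```

Listing every pathway makes the point of the diagram concrete: each threat on the left can reach each consequence on the right unless a preventative or mitigating measure sits in between.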

So, let’s shift from my incident, which fortunately ended well, to an accident that highlights the importance of safety systems. There’s a subtle difference here—and it’s vital to be aware that for an accident not to happen, all elements of the system must be in place. Rather than focusing on the pilot’s actions, I’d like to examine the safety systems failure that led to this particular accident.

What follows is the synopsis of the ATSB’s final report, Collision with terrain involving Cessna 182, VH-TSA, at Tomahawk, Tasmania, on 20 January 2018, which describes a fatal aircraft accident.

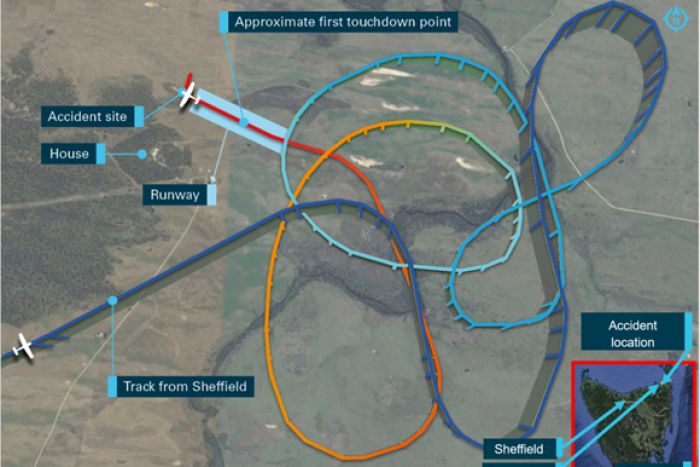

On 20 January 2018, the pilot of a Cessna 182P aircraft, registered VH-TSA, departed The Vale Airstrip, Sheffield, for a private airfield at Tomahawk, Tasmania. On arrival, the pilot conducted a number of orbits prior to approaching the runway. The aircraft touched down more than halfway along the runway before bouncing several times. In response, the pilot commenced a go‑around but the aircraft collided with a tree beyond the end of the runway and impacted the ground. The passenger was fatally injured and the pilot sustained serious injuries.

Using a combination of both models above, we can examine the safety systems’ failure using these categories:

Threat: the preconditions, supervisory and organisational factors.

Consequence: the specific acts that led to the accident.

Hazard: the ‘knot’ at the centre, or the accident itself, which is the outcome of the threats leading to the consequences.

This accident highlights that while the failure of one part of the system alone may not have necessarily led to tragedy, the combination of multiple factors allowed the flight of a capable crew and serviceable aircraft to end in disaster.

One: Hard to find airport leads to poor approach

Report conclusion: Analysis of the recorded flight track information identified that the pilot of TSA did not join a leg of the circuit or establish the aircraft on final approach from at least 3 NM. The pilot did not conduct a stabilised approach, which combined with the tailwind, resulted in the aircraft being too high and fast and a bounced landing well beyond the runway threshold.

Threat: The airport was hard to identify.

Consequence: When identified, the aircraft was not in a position for the pilot to readily join on a standard leg of the circuit.

Hazard: The pilot’s approach wasn’t stabilised.

Two: Time and weather pressures

Report conclusion: The pilot stated that there was pressure to land due to the weather, with clouds at about 1400 ft and light showers of rain in the area. Additionally, it was later than their original estimated arrival time of 1700.

Threat: Pressure to land due to both time and weather.

Consequence: The pilot was determined to land, despite flight conditions that might, ordinarily, suggest otherwise.

Hazard: A landing that was too fast, with a tailwind and inadequate runway for a go-around.

Three: Weather

Report conclusion: The pilot reported that he did not identify the forecast difference in wind direction between 5000 and 1000 ft and commented that he found the grid point wind and temperature graphical information provided by the BoM more difficult to interpret than the text format used until November 2017. An experienced pilot who had operated numerous times at the airfield reported that the location was frequently affected by a sea breeze.

Threat: In the absence of a windsock, the pilot deduced the wind direction to be the same as the en route wind.

Consequence: The aircraft landed with a significant tailwind.

Hazard: As a result, so much runway was used that a go-around was not possible.

Four: Planning

Report conclusion: Based on the landing distance chart in the Pilot’s Operating Handbook (POH), the total distance required for the Cessna 182P to clear a 50 ft obstacle when landing at sea level pressure altitude in nil wind, on short dry grass at 30°C, was 1648 ft (502 m). Therefore, in nil wind conditions, there was sufficient length available on the runway used by the pilot for a landing over a 50 ft obstacle.

The POH stated that a 50 per cent increase in landing distance was required with a tailwind up to 10 kt. In this instance, that equated to a required distance of 2472 ft (753 m). Therefore, if the POH guidance was followed, the longest available runway length at the airfield was too short for landing with a 10-kt tailwind.
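The arithmetic behind those figures is simple enough to check yourself. The sketch below reproduces it, assuming only the numbers quoted in the report: the 1648 ft nil-wind distance and the 50 per cent tailwind increase. The function names and the `available_ft` parameter are hypothetical, chosen for illustration, and the sketch is no substitute for the charts in the aircraft’s own POH.

```python
FT_TO_M = 0.3048  # exact conversion from feet to metres


def landing_distance_with_tailwind(nil_wind_distance_ft, tailwind_factor=1.5):
    """Apply the POH's 50 per cent increase for a tailwind of up to 10 kt."""
    return nil_wind_distance_ft * tailwind_factor


def runway_is_sufficient(required_ft, available_ft):
    """True only if the available length covers the required distance."""
    return available_ft >= required_ft


nil_wind_ft = 1648  # from the POH chart, as quoted in the ATSB report
required_ft = landing_distance_with_tailwind(nil_wind_ft)

print(f"Nil wind: {nil_wind_ft} ft ({nil_wind_ft * FT_TO_M:.0f} m)")
print(f"With tailwind: {required_ft:.0f} ft ({required_ft * FT_TO_M:.0f} m)")
```

Passing the surveyed length of your intended runway to `runway_is_sufficient` as `available_ft` turns the calculation into a go or no-go check before departure, rather than a discovery on late final.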

Threat: The plan for landing failed to consider the excessive tailwind.

Consequence: The pilot was forced to attempt a go-around on a runway that was too short for the conditions.

Hazard: The unsuccessful landing resulted in a collision with a tree.

Five: Miscommunication

Report conclusion: The airfield owner, also a pilot, reported that he was concerned that the pilot of TSA was attempting to land the aircraft towards the west, which would result in a tailwind he estimated to be about 15 kt. In response, he drove his vehicle onto the runway towards the approaching aircraft, with headlights on and hazard lights flashing, in an attempt to communicate to the pilot to abort the landing. The pilot reported that he thought the driver was indicating where to land, and so he continued the approach.

Threat: The pilot’s prior research of the airport did not include the possibility of signals from people on the ground and the attempted landing continued.

Consequence: The signal from the airstrip owner was misinterpreted as guidance to continue the landing, rather than as the warning to abort that was intended.

Hazard: The landing continued in unsuitable flight conditions, at least partly as a result of this miscommunication.

Six: Peer pressure and decision making

Report conclusion: The pilot reported that, following the bounced landing, the passenger instructed him to initiate a go‑around. In response, he applied full power and recalled that the engine appeared to have responded normally.

Additionally, the pilot reported that the passenger stated she ‘would get the flaps’, during the go‑around and he assumed that she had selected the flap lever to the 10° position. Examination of the wreckage identified that, while the flap lever was in that position in the cockpit after impact, measurement of the flap actuator showed that the flaps were still in the fully extended position. Given that discrepancy, the ATSB concluded that either the lever had not been selected up for sufficient time to enable the flaps to start to retract before the aircraft collided with the tree, or the lever moved during the accident sequence.

Threat: The passenger, who was the more experienced aviator of the two, initiated the go-around and asserted control of the flaps.

Consequence: The pilot went around, and it seems possible that excessive flap hindered the aircraft’s climb.

Hazard: The aircraft struck a tree that was in the path of the degraded climb.

This accident clearly demonstrates the need to check off each element of the safety system, in this case:

- obtaining all relevant information about the local conditions, including wind direction and strength, prior to commencing an approach to an aerodrome.

- conducting a standard approach, which enables assessment of the environmental and runway conditions and allows checks to be completed in a predictable manner.

- initiating a go-around promptly, and ensuring the aircraft is correctly configured.

The ATSB concluded that the causes of the accident were multiple. These were:

- the selected approach direction exposed the aircraft to a tailwind that significantly increased the groundspeed on final approach and resulted in insufficient landing distance available.

- the final approach path was not stable. In combination with the tailwind, that resulted in the aircraft being too high and fast with a bounced landing well beyond the runway threshold.

- the go-around was initiated at a point from which there was insufficient distance remaining for the aircraft to climb above the tree at the end of the runway in the landing flap configuration and tailwind conditions.

This accident highlights the reasons why it’s so important to remain aware that for an accident not to happen, all elements of the system must be considered, actioned and confirmed in place.
