Knowing how the machine works is widely accepted as essential for safe flight. Recent developments in neuroscience mean this principle can now be applied to the most complex and inscrutable component of any manned aircraft: the pilot.

In this century, technical progress in brain imaging has revolutionised our understanding of the brain. We know more than ever about the brain structures and functions behind our senses, thoughts and movements.

A new hybrid discipline of neuroergonomics, combining neuroscience, cognitive engineering and human factors, has emerged. It examines human-technology interaction in the light of what is now known about the human brain.

Neuroergonomics applies to aviation in areas including motor control, attention, learning, alertness, fatigue, workload, decision-making, situational awareness and anxiety.

Human performance and human error have traditionally been investigated empirically, with researchers drawing conclusions from what people say or do. These methods have worked well enough to deliver significant progress in some areas (the development of crew resource management, for example). But the ability to see and record the brain activity behind what people are thinking is a step change from these methods. Twentieth-century psychologists (and, to a lesser extent, psychiatrists) regarded the mind as a black box: not in the aviation sense of a flight recorder, but as a mysterious object whose inner workings were unknowable.

‘We know a lot about behaviour, and these sophisticated techniques for visualising the brain are confirming what we know behaviourally,’ human factors specialist Professor Ann Williamson from the University of NSW School of Aviation says. ‘Frustratingly, much of this empirical knowledge has been known, but not acted on’.

Neuroscientists Frederick Dehais and Daniel Callan say cognitive neuroscience has ‘opened “the black box” and shed light on underlying neural mechanisms supporting human behavior’.

Dehais and Callan propose a bold manifesto for neuroergonomics: to combine the real-world research of human factors with the accuracy and rigour of laboratory science. This is becoming possible as the tools for looking at the brain become smaller and sharper.

The mind laid open: techniques of brain imaging

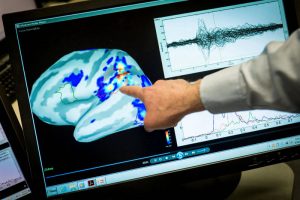

‘We’ve had the gross basics since the 1970s,’ says University of Sydney cognitive scientist Associate Professor Tom Carlson. ‘But our knowledge was limited to basic contrasts: is this brain area on or off? Now we can get into much more fine-grained detail about what that particular brain area might represent: objects, places or navigation?’

The brain’s activity can be measured indirectly, through functional near infra-red spectroscopy (fNIRS) or functional magnetic resonance imaging (fMRI), and directly, through electroencephalography (EEG) and magnetoencephalography (MEG).

- Functional near infra-red spectroscopy (fNIRS) is a non-invasive optical brain monitoring technique that measures changes in the flow and oxygenation of blood in the brain. It offers better spatial accuracy than EEG but low temporal resolution: blood-flow changes lag behind neural activity, so it cannot detect rapid or transient changes in brain activity.

- Functional magnetic resonance imaging (fMRI) also measures brain activity indirectly, through changes in blood oxygenation level. It has excellent spatial resolution but, like fNIRS, its temporal resolution is slow, on the order of several seconds, limited by the speed of blood changes in the brain.

- Electroencephalography (EEG) measures voltage fluctuations resulting from ionic current within the neurons of the brain using electrodes attached to the scalp. The technique, used since the 1920s, has excellent temporal resolution but poor spatial accuracy.

- Magnetoencephalography (MEG) directly measures the magnetic fields generated by large groups of similarly oriented neurons. Its temporal resolution is fast (about 1 millisecond). Because skin, bone and cerebrospinal fluid are effectively transparent to magnetic fields, MEG can be more spatially accurate than EEG, achieving spatial resolution finer than 1 cm.

fMRI and MEG are the most accurate brain scanning techniques, but they require large, expensive, fixed facilities with shielded rooms, and subjects have to keep their heads still. Even so, researchers have combined these brain-imaging methods with realistic flight and driving simulations to investigate the neural activity involved in flying or driving.

MEG, at least, may become more portable in future. Richard Bowtell from the University of Nottingham and his colleagues have designed a portable MEG device that is worn like a helmet, allowing people to move freely during scanning.

Brain scientists are getting around the limitations of each technique by using them in combination. French researcher Dehais describes a staged series of experiments: well-controlled protocols in high-resolution devices such as fMRI and MEG, sometimes combined with low-fidelity personal computer-based simulators; then motion-based high-fidelity flight simulators combined with portable but lower-resolution brain recording devices such as fNIRS and EEG; and eventually experiments in real flight conditions using the same portable devices.

The pilot brain

Among the insights of neuroscience is that pilots’ brains are subtly different from those of the general population. In this they resemble musicians, who show differences in grey matter volume in motor, auditory and visual-spatial regions, and London taxi drivers, who develop an enlarged hippocampus after years spent studying the more than 300 routes required for the Knowledge of London exams.

Callan and colleagues ran an fMRI experiment in 2013 looking at the execution and observation of aircraft landings. They found that pilots showed greater activity than non-pilots in brain regions involved in motor simulation, thought to be important for imitation-based learning. In 2014, Ahamed, Kawanabe, Ishii and Callan found that glider pilots, compared with non-pilots, showed higher grey matter density in the ventral premotor cortex, thought to be part of the ‘Mirror Neuron System’.

Interestingly, pilots also showed greater brain activity when observing their own previous landings than when observing another person’s.

Research results

What alarm? Deafness beyond inattention

Neuroscience is confirming aspects of pilot behaviour that had previously been inferred from experience or, occasionally, from accident reports. One result of concern is that under stress, pilots literally do not hear cockpit alarms.

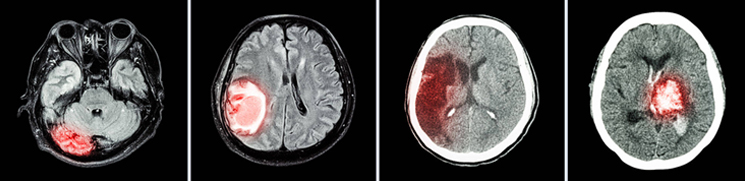

In 2017, Dehais, Callan and colleagues sent pilots through a simulated Red Bull-style pylon race while monitoring them in an fMRI scanner. Aural warnings sounded at intervals during the race.

The results revealed that pilots missed about 35 per cent of the alarms. More interestingly, the fMRI analysis showed that missed alarms, compared with detected ones, produced greater activation in several brain structures associated with an attentional bottleneck. ‘These latter regions were also particularly active when flying performance was low,’ Dehais and Callan write, ‘suggesting that when the primary task demand was excessive, this attentional bottleneck attenuated the processing of non-primary tasks to favor execution of the visual piloting task. This latter result suggests that the auditory cortex can be literally switched off by top-down mechanisms when the flying task becomes too demanding.’

A 2016 experiment by Dehais and colleagues put seven participants in a motion flight simulator facing a critical situation: smoke in the cabin requiring an emergency night landing in adverse meteorological conditions. The pilots also had to ignore a low-pitched tone but report a high-pitched alarm. Before the experiment, the volunteers were screened to determine whether they were ‘visual’ or ‘auditory’ persons.

The pilots missed 56 per cent of the auditory alarms. Interestingly, the pilots who were screened as ‘visual dominant’ were more likely to ignore and miss the alarms.

The researchers also found that the failure to process missed alarms showed up in brain responses within about 100 milliseconds of the alarm, well before the emergence of conscious awareness (about 300 milliseconds). Taken together, the results point to an early and automatic visual-to-auditory gating mechanism that literally shut down the pilots’ hearing.

Callan and Dehais (2018) conducted a third experiment in actual flight conditions to improve understanding of the neural mechanisms underlying alarm misperception.

VFR pilots flew a light aircraft while hooked up to an EEG system. In flight, they had to perform a demanding flying task while responding to an auditory alarm. The findings were consistent with the fMRI study: an attentional bottleneck was engaged, leading to a desynchronisation of the auditory cortex that prevented accurate processing of the alarms.

Callan says, ‘This series of experiments represent typical illustrations of the neuroergonomics approach; from basic experiments conducted with high-definition measurement tools in the lab, to the measurement of cognition in realistic settings.’

An aircraft that reads the pilot’s mind

In the pulp novel and later feature film Firefox, a fictitious Soviet super plane had weapons controlled by the pilot’s thoughts (which, in the film version, were telegraphed by star Clint Eastwood’s tough-guy facial expressions). In the real world, converting thought into action is a tough task, despite considerable research in the fields of prosthetics and computer games. ‘On the commercial side it’s often oversold,’ Carlson says. ‘They show you a very small window in demonstrations but it’s much less successful in real world use.’

A 2016 experiment investigated a different way to harness a pilot’s impulses. Callan, Terzibas, Cassel, Sato and Parasuraman used fMRI and MEG to record brain activity during a flight simulation task. The first phase of the experiment involved detecting the brain signals associated with the pilot’s intention to move the control stick. In the second phase, the pilot flew a twisting course through the Grand Canyon. When the MEG system detected the intention to move the stick (for elevator deflection) in the pilot’s brain, the automation initiated the movement itself.
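The study decoded movement intention from MEG recordings, and its actual decoder is not described here. As a rough illustration of the triggering step only, the Python sketch below assumes a hypothetical stream of decoded ‘intention scores’ between 0 and 1 and fires once the score stays above a threshold for a few consecutive samples. The class name `IntentTrigger`, the threshold and the hold count are illustrative assumptions, not values or methods from the study.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class IntentTrigger:
    """Hypothetical trigger: fires when a decoded movement-intention score
    stays at or above `threshold` for `hold` consecutive samples."""
    threshold: float = 0.8  # illustrative value, not from the study
    hold: int = 3           # consecutive samples required, to reject brief spikes

    def first_trigger(self, scores: Iterable[float]) -> Optional[int]:
        """Return the index of the sample at which the trigger fires, or None."""
        run = 0
        for i, score in enumerate(scores):
            # Count consecutive above-threshold samples; reset on any dip.
            run = run + 1 if score >= self.threshold else 0
            if run >= self.hold:
                return i
        return None


if __name__ == "__main__":
    scores = [0.1, 0.2, 0.85, 0.9, 0.95, 0.97, 0.4]
    print(IntentTrigger().first_trigger(scores))  # prints 4
```

Requiring several consecutive samples trades a little latency for fewer false triggers, a trade-off that matters when the whole aim is to save tens of milliseconds.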

‘Our goal is to develop a system that enhances performance to super human levels during normal hands-on operation of an aeroplane (vehicle) by reducing the response time by directly extracting from the brain the movement intention in response to a hazardous event,’ the scientists wrote in their report. In the simulation, machine detection of pilot intention reduced mean control response time from 425.0 milliseconds to 352.7 milliseconds, a saving of about 72 milliseconds, without adding stress or workload for the pilots. The authors mused that ‘it would be interesting to determine if the pilot notices the engagement of the neuroadaptive automation or rather just feels that they are really fast in reacting.’
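The time saving follows directly from the two reported means. The short Python calculation below reproduces it and, purely as an illustration, converts it into distance flown at an assumed speed of 120 knots; that speed is a hypothetical figure, not one from the study.

```python
from typing import Tuple

KNOT_TO_MPS = 1852 / 3600  # 1 knot = 1852 metres per hour


def response_saving(baseline_ms: float, assisted_ms: float) -> Tuple[float, float]:
    """Return (saving in ms, saving as a percentage of the baseline)."""
    saving = baseline_ms - assisted_ms
    return saving, 100 * saving / baseline_ms


# Mean response times reported for the simulation.
saving_ms, saving_pct = response_saving(425.0, 352.7)

speed_kt = 120  # assumed speed, for illustration only
distance_m = speed_kt * KNOT_TO_MPS * saving_ms / 1000

print(f"{saving_ms:.1f} ms faster ({saving_pct:.0f}% of baseline)")  # 72.3 ms faster (17% of baseline)
print(f"about {distance_m:.1f} m flown at {speed_kt} kt")            # about 4.5 m flown at 120 kt
```

Seen this way, a saving of roughly a sixth of the reaction time amounts to a few metres of flight path at light-aircraft speeds.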

Knowing me, knowing you: the neuroadaptive flight deck

Dehais and Callan suggest it may be possible to develop neuroadaptive flight decks to reduce workload on pilots and help them process critical information.

An aircraft that can detect when its pilots are overloaded or distracted could take action to regain their attention, for example. ‘It has been shown that switching off the displays for a very short period is an effective way to mitigate attentional tunnelling,’ they write.
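How such a countermeasure might be scheduled can be sketched in a few lines of Python. The sketch assumes a hypothetical monitor that flags, every 100 milliseconds, whether the pilot appears to be tunnelling; the function name `blanking_schedule`, the trigger duration and the blanking duration are illustrative assumptions, not values from Dehais and Callan. The point is the structure: a sustained condition triggers a brief blanking, after which the count starts again.

```python
from typing import List, Tuple


def blanking_schedule(tunnelling: List[bool], sample_ms: int = 100,
                      trigger_ms: int = 500, blank_ms: int = 200) -> List[Tuple[int, int]]:
    """Return (start_ms, end_ms) windows during which a display would be blanked.

    tunnelling: one hypothetical flag per sample, True when the monitor
    judges the pilot's attention to be tunnelled.
    """
    windows: List[Tuple[int, int]] = []
    run_ms = 0       # how long tunnelling has persisted without a break
    blank_until = 0  # no new blanking may start before this time
    for i, flag in enumerate(tunnelling):
        t = i * sample_ms
        run_ms = run_ms + sample_ms if flag else 0
        if run_ms >= trigger_ms and t >= blank_until:
            windows.append((t, t + blank_ms))
            blank_until = t + blank_ms
            run_ms = 0  # restart the count after intervening
    return windows


if __name__ == "__main__":
    flags = [False] + [True] * 6 + [False] * 2
    print(blanking_schedule(flags))  # prints [(500, 700)]
```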

They also propose ‘adaptive automation’ that automatically shares tasks between human and automation to maintain a constant, acceptable and stimulating task load for the pilot.
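The idea of holding task load inside an acceptable band can be illustrated with a toy allocator. The Python sketch below assumes a hypothetical workload estimate between 0 and 1 (for example, one derived from EEG or fNIRS) and moves one task at a time between pilot and automation whenever the estimate leaves an assumed band. The class `AdaptiveAllocator`, the task names and the band limits are invented for illustration and do not come from Dehais and Callan.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AdaptiveAllocator:
    """Toy allocator that tries to keep estimated pilot workload in [low, high]."""
    low: float = 0.4   # below this the pilot is under-stimulated (assumed)
    high: float = 0.7  # above this the pilot is overloaded (assumed)
    pilot_tasks: List[str] = field(default_factory=list)
    automated: List[str] = field(default_factory=list)

    def update(self, workload: float) -> None:
        """Shift at most one task per update, based on the latest estimate."""
        if workload > self.high and self.pilot_tasks:
            # Overloaded: hand the pilot's last-listed task to automation.
            self.automated.append(self.pilot_tasks.pop())
        elif workload < self.low and self.automated:
            # Under-loaded: return the most recently automated task.
            self.pilot_tasks.append(self.automated.pop())


if __name__ == "__main__":
    alloc = AdaptiveAllocator(pilot_tasks=["navigation", "fuel monitoring", "radio calls"])
    for estimate in [0.5, 0.8, 0.9, 0.3]:
        alloc.update(estimate)
    print(alloc.pilot_tasks, alloc.automated)
    # prints ['navigation', 'fuel monitoring'] ['radio calls']
```

Moving one task per update, rather than several at once, is a simple way to avoid abrupt swings in what the pilot is responsible for. A real system would also have to decide which tasks may be automated at all and how each hand-over is made clear to the crew.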